Libby Kent

Container Days Boston 2015

Being new to the container space, I found it a bit hard to grok all the different parts, how they work together, and how the space has evolved.

Terminology

Containers

Docker / Rocket

cgroups

mount

LXC - Linux container

OpenVZ

Virtual Machine

Overview

This will attempt to be an overview of what I learned at Container Days Boston, a two-day event where I listened to a bunch of knowledgeable speakers, both onsite and in the bars, and attended a Docker workshop on day 2. I have been container-curious for a while. Although I have worked with Virtual Machines, and found Vagrant invaluable for setting up environments and deploying to different environments, containers were more of a buzzword that I hoped to play with some day. I am all too familiar with logging on to a machine only to find that something has been set manually, or having to recreate a machine with no obvious way to do it apart from some outdated docs or the `history` command. Containers to the rescue? I'm hoping so!

A Brief History Of Containers (Jeff's slides)

Jeff and Kir started out with the motivation and history behind all this virtualization: time sharing of expensive mainframe computers at universities. Jeff gave the Unix side of the story, and Kir gave the Linux (OpenVZ) side of the story.

Virtual Machines and Hypervisor

Virtual Machines (or guests) sit on top of a hypervisor: the software, firmware, or hardware that creates and runs the VMs.

There are two types of hypervisors:

- Type-1: native or bare-metal hypervisors, which run directly on the hardware

- Type-2: hosted hypervisors, which run on top of a host operating system

A VM runs on emulated (virtualized) hardware and boots a full guest operating system with its own kernel, drivers, and file system (not shared with the host). That is why VMs are slow to start up: they have to bring up an entire machine. On the plus side, VMs are a good way to sandbox: if one VM goes down, the other VMs keep running.

Fun fact from the talk: the JVM is a virtual machine that doesn't emulate the underlying hardware at all; it runs a program's bytecode instead of pretending to be a physical computer.

Levels Of Isolation From Strongest To Weakest

- Partitioning: best isolation, but worst flexibility (you can't just give a partition a little more power).

- Virtual Machines: sit on top of a hypervisor; if the hypervisor fails, they ALL fail.

- OS Virtualization: containers / zones (Solaris), i.e. collections of processes unified by one namespace, with access to an operating system KERNEL that they share with other containers / zones.
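On Linux you can actually see which namespaces a process belongs to: each entry under `/proc/<pid>/ns` is a symlink naming the namespace type and an inode number. Here is a minimal Python sketch (Linux only; `namespace_ids` and `same_namespace` are my own helper names, not a library API). Processes in the same container should report the same identifiers, while a process in a different container reports different ones for the namespaces they don't share.

```python
import os

def namespace_ids(pid="self"):
    """Return {namespace type: identifier} for a process,
    e.g. {"pid": "pid:[4026531836]", "net": "net:[4026531840]", ...}.

    Linux-only: each entry in /proc/<pid>/ns is a symlink whose target
    names the namespace type and its inode number.
    """
    ns_dir = f"/proc/{pid}/ns"
    ids = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            ids[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            # Entries of other users' processes may be unreadable; skip them.
            continue
    return ids

def same_namespace(pid_a, pid_b, kind="pid"):
    """True if both processes report the same namespace of the given kind."""
    return namespace_ids(pid_a)[kind] == namespace_ids(pid_b)[kind]

if __name__ == "__main__":
    for kind, ident in namespace_ids().items():
        print(f"{kind:20} {ident}")
```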

Containers - Virtual Isolated Operating Systems

Containers in Linux / Unix are enabled by the ability to group processes together and apply resource controls to that group. Resource limits (I/O, CPU) set on a container apply to the entire group of processes that make up the container. Each container has its own group of processes, its own isolated disk space and namespaces, its own virtual network device (so each container has its own IP address), its own users (including root), and its own mounts, e.g. shared drives can be mounted into the container's group of processes.
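To make "limits apply to the whole group" concrete, here is a rough Python sketch of what a container runtime does with cgroups. Two assumptions to flag: it uses the newer cgroup v2 interface (files `cpu.max`, `memory.max`, `cgroup.procs` under a unified hierarchy assumed to be mounted at `/sys/fs/cgroup`), whereas 2015-era kernels used cgroup v1; and the helper names `cgroup_plan` / `apply_plan` are hypothetical. By default it only computes the file writes, since applying them needs root.

```python
import os

CGROUP_ROOT = "/sys/fs/cgroup"  # assumption: cgroup v2 unified hierarchy mounted here

def cgroup_plan(name, pids, cpu_percent=None, memory_bytes=None, period_us=100_000):
    """Return (group_dir, [(file, value), ...]): the writes that would cap CPU and
    memory for a cgroup v2 group called `name` and move `pids` into it.
    The limits apply to all processes in the group combined, not per process."""
    group = os.path.join(CGROUP_ROOT, name)
    writes = []
    if cpu_percent is not None:
        # cpu.max is "<quota> <period>" in microseconds: the whole group may use
        # `quota` us of CPU time in every `period` us.
        quota = int(period_us * cpu_percent / 100)
        writes.append((os.path.join(group, "cpu.max"), f"{quota} {period_us}"))
    if memory_bytes is not None:
        # memory.max is a hard memory limit in bytes for the whole group.
        writes.append((os.path.join(group, "memory.max"), str(memory_bytes)))
    for pid in pids:
        # Writing a PID to cgroup.procs moves that process into the group
        # (one PID per write).
        writes.append((os.path.join(group, "cgroup.procs"), str(pid)))
    return group, writes

def apply_plan(group, writes):
    """Perform the writes. Needs root, and the cpu/memory controllers must be
    enabled in the parent's cgroup.subtree_control."""
    os.makedirs(group, exist_ok=True)  # mkdir in cgroupfs creates the group
    for path, value in writes:
        with open(path, "w") as f:
            f.write(value)
```

For example, `cgroup_plan("demo", [101, 102], cpu_percent=50, memory_bytes=256 * 1024 * 1024)` would cap both processes together at half a CPU and 256 MiB.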

In 2007, engineers at Google started working on cgroups (control groups) as a way to group a set of processes and set limits for all processes in the group. cgroups were merged into the Linux kernel in version 2.6.24 (released in January 2008). Before that, only ulimit was available, which sets resource limits for ONE process; this is not sufficient, because applications are usually made up of more than one process.
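The per-process nature of ulimit (the shell front end to the `setrlimit` system call) is easy to see from Python's standard `resource` module. This sketch, with a hypothetical helper `run_with_rlimit`, lowers a limit in one child process only: the child sees the new limit, the parent keeps its own, and any processes the child later forks each get a separate copy. So ten workers with a 1 GB address-space limit (`RLIMIT_AS`) can still use 10 GB between them, which is the gap cgroups fill.

```python
import resource
import subprocess
import sys

def run_with_rlimit(code, limit_kind, soft):
    """Run `code` in a child Python process whose soft limit for `limit_kind`
    is lowered to `soft`. Only that child process is affected."""
    def lower_limit():
        # Runs in the child between fork and exec, so the parent is untouched.
        _, hard = resource.getrlimit(limit_kind)
        resource.setrlimit(limit_kind, (soft, hard))
    result = subprocess.run(
        [sys.executable, "-c", code],
        preexec_fn=lower_limit,
        capture_output=True,
        text=True,
        check=True,
    )
    return result.stdout.strip()

if __name__ == "__main__":
    probe = "import resource; print(resource.getrlimit(resource.RLIMIT_NOFILE)[0])"
    print("child soft NOFILE: ", run_with_rlimit(probe, resource.RLIMIT_NOFILE, 64))
    print("parent soft NOFILE:", resource.getrlimit(resource.RLIMIT_NOFILE)[0])
```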

Since all containers on a host run on top of the same kernel, one can only run "guests" (or "virtualized OSes") that work with the host's kernel version.

Container Implementation

- cgroups for resource controls (I/O, RAM, CPU) + namespaces for isolation

- LXC (Linux Containers): OS-level virtualization for running multiple isolated Linux systems (containers) on one host [wiki]

- OpenVZ: containers using cgroups + namespaces (the OpenVZ team implemented namespaces)

- Solaris Containers (including Solaris Zones)

Advantages of Containers

- Lightweight compared to VMs, each of which has its own kernel and takes up its own RAM and CPU

- Boot in a few seconds

- Simple: starting a container is just starting a few processes (plus copy-on-write, see Jerome's talk)

- Better resource sharing: Faster context switching, direct path to I/O

Enter Docker and other Container runtime APIs

Containers are still pretty low level, so Docker provides a nice API that creates and manages your containers for you. As far as I understand, Docker can be used with different container backends, e.g. OpenVZ or Solaris Zones. Boyd gave a nice Docker tutorial (Docker 101) on day 2.

Docker gives you commands for running / managing containers:

- `docker start`

- `docker stop`

Dockerfile from Boyd's training

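```dockerfile
FROM odise/busybox-python

WORKDIR /code

RUN easy_install redis && easy_install flask

# ADD copies the current directory '.' into /code in the image at build time.
# It is not a shared directory: to share a host directory with a running
# container, bind-mount it with `docker run -v /host/path:/code ...` instead.
ADD . /code

CMD ["python", "app.py"]
```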
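To build and run an image from a Dockerfile like this, you use `docker build` and `docker run`. A small Python sketch that only assembles the command lines (nothing is executed); the helper names, the container name `web`, and port 5000 are my own assumptions (5000 is Flask's default port, and `app.py` would need to listen on `0.0.0.0` for the published port to reach it). The flags `-t`, `-d`, `--name`, `-p`, `--memory`, and `-v` are standard Docker CLI flags.

```python
import os
import shlex

def docker_build_cmd(tag, context="."):
    """`docker build -t <tag> <context>`: build an image from the Dockerfile in <context>."""
    return ["docker", "build", "-t", tag, context]

def docker_run_cmd(image, name, port=None, memory=None, bind_src=None, workdir="/code"):
    """Assemble a `docker run` command.

    port:     publish a container port on the same host port (-p host:container)
    memory:   memory cap for the whole container, e.g. "256m" (--memory)
    bind_src: host directory bind-mounted over `workdir` (-v src:dst). This shares
              files with the host at run time, unlike ADD, which copies them into
              the image at build time.
    """
    cmd = ["docker", "run", "-d", "--name", name]
    if port is not None:
        cmd += ["-p", f"{port}:{port}"]
    if memory is not None:
        cmd += ["--memory", memory]
    if bind_src is not None:
        # Bind mounts need an absolute host path.
        cmd += ["-v", f"{os.path.abspath(bind_src)}:{workdir}"]
    cmd.append(image)
    return cmd

def docker_stop_cmd(name):
    return ["docker", "stop", name]

def docker_start_cmd(name):
    return ["docker", "start", name]

if __name__ == "__main__":
    print(shlex.join(docker_build_cmd("mysmallapp")))
    print(shlex.join(docker_run_cmd("mysmallapp", "web", port=5000, memory="256m")))
    print(shlex.join(docker_stop_cmd("web")))
```

Passing any of these lists to `subprocess.run(cmd, check=True)` would execute them against a local Docker daemon.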

There are other container runtime APIs apart from Docker, e.g. CoreOS's Rocket.

OK, so now we have containers. What else do we need?

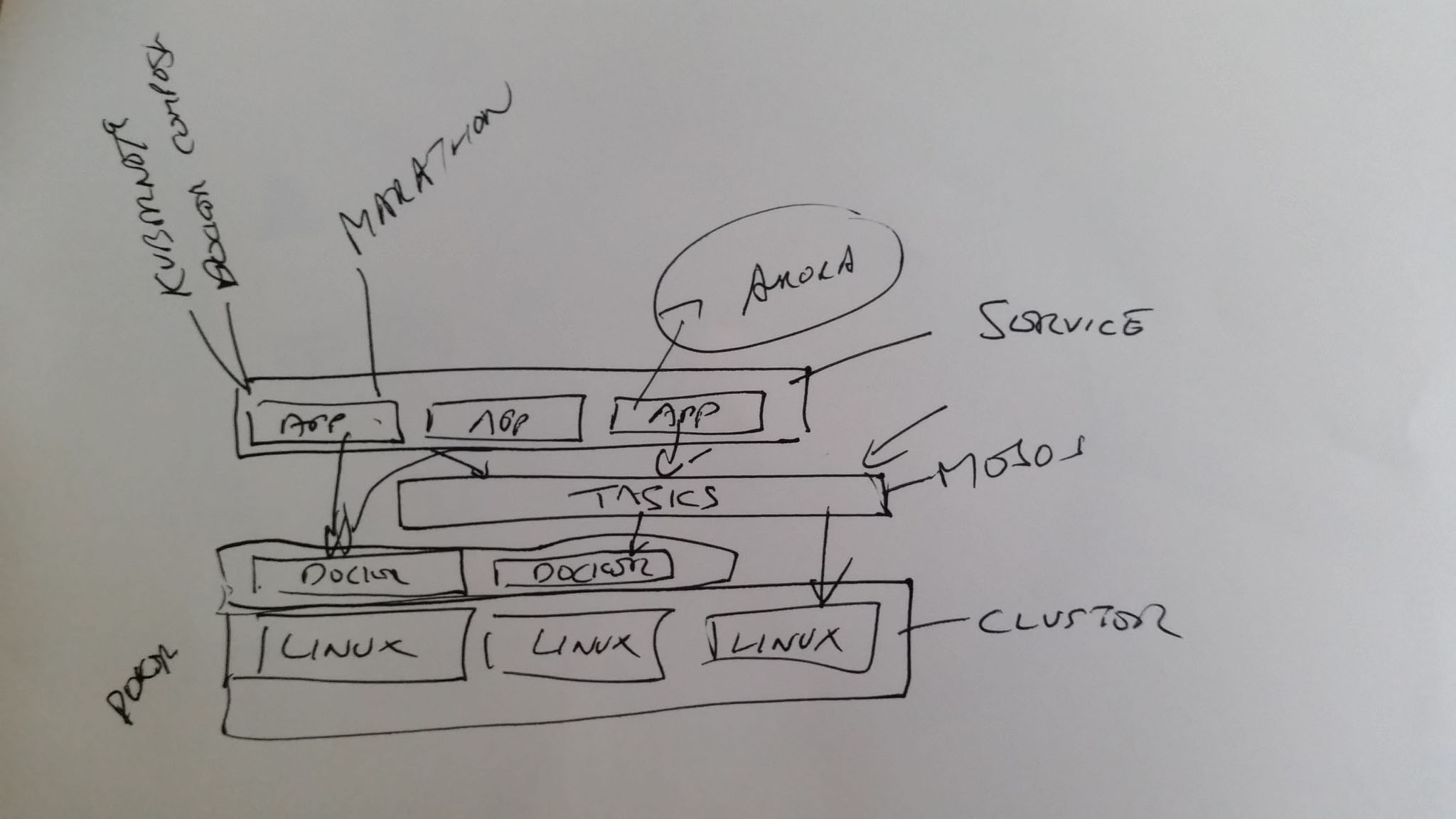

Task Schedulers, e.g. Mesos

- Mesos: a task scheduler that lets you use your machines' resources effectively. As I understand it, each machine reports its spare resources to the Mesos master, the master offers those resources to a framework's scheduler, and the scheduler accepts the offers that fit its tasks (e.g. a web app that needs X CPUs and Y MB of memory) and launches the tasks on those machines. See Joe's talk.
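The core placement decision, "which machine has room for this task?", can be sketched as a toy first-fit scheduler. Everything here (`Offer`, `Task`, `schedule`, the machine names) is hypothetical and far simpler than a real Mesos framework, which receives offers from the master through Mesos's own APIs; it only illustrates matching requested resources against what each machine has free.

```python
from dataclasses import dataclass

@dataclass
class Offer:
    agent: str    # machine whose spare resources are on offer
    cpus: float
    mem_mb: int

@dataclass
class Task:
    name: str
    cpus: float
    mem_mb: int

def schedule(tasks, offers):
    """First fit: place each task on the first offer with enough spare CPU and
    memory, shrinking that offer as it fills up.

    Returns {task name: agent}. Tasks that fit nowhere are left out; a real
    scheduler would wait for the next round of offers.
    """
    remaining = [Offer(o.agent, o.cpus, o.mem_mb) for o in offers]  # don't mutate the caller's offers
    placement = {}
    for task in tasks:
        for offer in remaining:
            if offer.cpus >= task.cpus and offer.mem_mb >= task.mem_mb:
                offer.cpus -= task.cpus
                offer.mem_mb -= task.mem_mb
                placement[task.name] = offer.agent
                break
    return placement

if __name__ == "__main__":
    offers = [Offer("node-a", 1.0, 512), Offer("node-b", 4.0, 4096)]
    tasks = [Task("web", 0.5, 256), Task("worker", 2.0, 2048)]
    print(schedule(tasks, offers))
```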

Shared Repositories - Quay.io

Once you have your container image, you can push it to a shared repository where other developers can access it. For example, the Docker CLI has commands to tag your image with the repository's name and push it there:

```shell
$ docker tag mysmallapp:latest quay.io/{quayusername}/myquayapp:1.0
$ docker push quay.io/{quayusername}/myquayapp:1.0
```
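The reason for retagging is that `docker push` works out where to push from the image name itself: the first path component is treated as a registry host when it looks like one, otherwise the image goes to Docker Hub. A simplified Python sketch of that naming rule; `parse_image_ref` is a hypothetical helper, `alice` stands in for `{quayusername}`, and digests (`@sha256:...`) are not handled.

```python
def parse_image_ref(ref):
    """Split an image reference like "quay.io/alice/myquayapp:1.0" into
    (registry, repository, tag).

    Simplified rules (an approximation of Docker's behaviour for common cases):
    - the first path component is a registry host only if it contains "." or ":"
      or is "localhost"; otherwise the registry is Docker Hub (docker.io)
    - the tag follows the last ":" after the last "/", defaulting to "latest"
    """
    registry = "docker.io"
    first, sep, rest = ref.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, ref = first, rest
    name, colon, tag = ref.rpartition(":")
    if not colon or "/" in tag:
        # No tag given (a ":" before a "/" would belong to a host:port).
        name, tag = ref, "latest"
    return registry, name, tag

if __name__ == "__main__":
    print(parse_image_ref("mysmallapp:latest"))
    print(parse_image_ref("quay.io/alice/myquayapp:1.0"))
```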

Service Level Application Manager:

Mesosphere, Kubernetes, Marathon, Aurora

As a service: Tutum

- Manages your docker deploys

- Manages tasks for you via Mesos

- Health Checks

- Monitoring / Logging
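Health checks like these typically come down to polling an endpoint and only declaring a task unhealthy after several failures in a row, so one slow response doesn't get it killed and restarted. A minimal standard-library sketch of that pattern; `http_probe`, `HealthTracker`, and the threshold of 3 are my own assumptions, not the API of Tutum, Marathon, or Kubernetes.

```python
import urllib.error
import urllib.request

def http_probe(url, timeout=2.0):
    """One health check: True if GET `url` answers with a 2xx status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return 200 <= resp.status < 300
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or a 4xx/5xx (HTTPError) all count as failures.
        return False

class HealthTracker:
    """Unhealthy only after `max_failures` consecutive failed probes;
    any successful probe resets the count."""

    def __init__(self, probe, max_failures=3):
        self.probe = probe
        self.max_failures = max_failures
        self.consecutive_failures = 0

    def check(self):
        if self.probe():
            self.consecutive_failures = 0
        else:
            self.consecutive_failures += 1
        return self.healthy

    @property
    def healthy(self):
        return self.consecutive_failures < self.max_failures

if __name__ == "__main__":
    tracker = HealthTracker(lambda: http_probe("http://127.0.0.1:5000/"))
    print("healthy" if tracker.check() else "unhealthy")
```

An orchestrator would call `check()` on a timer and restart or reschedule the task once `healthy` turns False.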